Like every other area of SEO, local SEO is constantly evolving. The last few years in particular have seen huge changes in the tools and techniques businesses need to use to rank well for location-specific searches.

Part of the problem is the advice and discussion on the topic. In 2014 in particular, most advice and discussion on local SEO centered on Google Places for Business and Google+ Local.

Now, I’m not saying that these aren’t important; that would be stupid, right? What I’m saying is that many other elements seem to have gained importance since Google’s “Pigeon” update last year.

So, where should SEOs and business owners be focusing their efforts? Are there still “quick wins” to be had? Here are my five tips for helping you, or your clients, get more local visibility in 2015.

1. Think Visibility, Not Rankings

So much of the discussion around local rankings has centered on position rather than visibility. If your listing and website rank top, you’ll get the majority of the traffic, right? Not necessarily.

Visibility is about controlling and dominating search real estate. If your local listing ranks top, with your website just below the fold, that might not be enough. What if your competitors are ranking with images on a visually led search and using AdWords to secure above-the-fold real estate with locally relevant extensions, reviews, and social integrations? Even if they rank below your primary Web properties, they will have far more visibility, garner more trust, get more visitors, and probably convert more.

2. Google Places for Business and Google+ Local

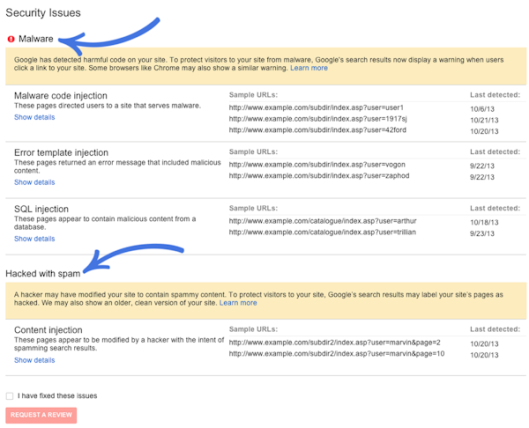

Having said all that, you shouldn’t ignore Google’s suggested channels for ranking your business. They still dominate the primary real estate in any locally oriented search. So, how do you make sure your listing appears as often as possible, and is presented in the best possible way?

In the past, it’s all been about reviews and citations. That much hasn’t changed. What is worth considering is the general shift toward ranking signals that can’t be gamed. Citations are comparatively easy to manipulate, while genuine reviews are not, so it’s a very reasonable assumption that reviews will soon carry more weight than citations.

Reviews are notoriously difficult to get. However, Google is making it far easier with every new integration it introduces between its various services. Take advantage of your audience on any Google-owned property, whether it’s a Google+ community, your YouTube followers, or your Gmail contact list. If you ask people to leave a review while they’re already logged into their Google account, it’s literally a couple of clicks. Make it seamless and they are more likely to take two minutes to help you out.

3. Get the Basics Right

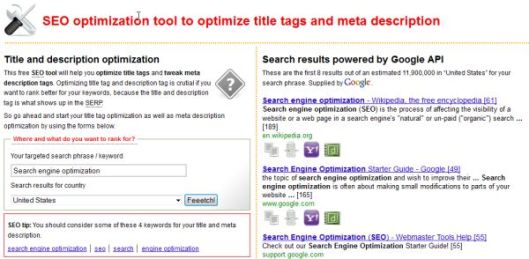

Though the standard of local SEO is generally improving, a great many businesses still aren’t getting the basics right. One of the most common failings I spot is a missing custom meta description.

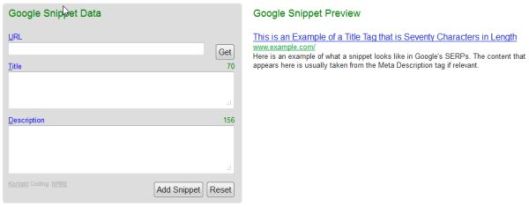

This is another area where businesses are throwing away chances to attract new visitors by focusing on their rank rather than on how they are perceived in the SERPs. Ranking number one only gets you into the shop window. If your snippet is just a fragment pulled from your page’s opening paragraph, and your competitors at numbers two and three have carefully crafted their descriptions to make searchers want to click, you’re going to lose out.
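To find pages with a missing or overlong description, a small audit script can help. The sketch below uses only Python’s standard-library `html.parser`; the class and function names are hypothetical, and the 155-character budget is an assumed rule of thumb, since Google truncates snippets by display width and may choose to show other page text instead.

```python
from html.parser import HTMLParser

# Assumed rule of thumb, not an official limit: Google truncates by pixel
# width, so treat this as a rough warning threshold only.
MAX_DESCRIPTION_CHARS = 155


class MetaDescriptionFinder(HTMLParser):
    """Collects the content of every <meta name="description"> tag on a page."""

    def __init__(self):
        super().__init__()
        self.descriptions = []

    def handle_starttag(self, tag, attrs):
        # attrs is a list of (name, value) pairs; value is None for bare attributes.
        if tag != "meta":
            return
        attributes = {name.lower(): (value or "") for name, value in attrs}
        if attributes.get("name", "").lower() == "description":
            self.descriptions.append(attributes.get("content", "").strip())


def audit_meta_description(html):
    """Return a list of human-readable problems with a page's meta description."""
    finder = MetaDescriptionFinder()
    finder.feed(html)
    non_empty = [d for d in finder.descriptions if d]
    if not non_empty:
        return ["missing custom meta description"]
    problems = []
    if len(finder.descriptions) > 1:
        problems.append("more than one meta description tag")
    description = non_empty[0]
    if len(description) > MAX_DESCRIPTION_CHARS:
        problems.append(f"description is {len(description)} chars; may be truncated")
    return problems
```

Run it over the HTML of each key landing page; an empty list means no obvious problems were found, not that the description is persuasive. Writing copy that makes searchers want to click is still a human job.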

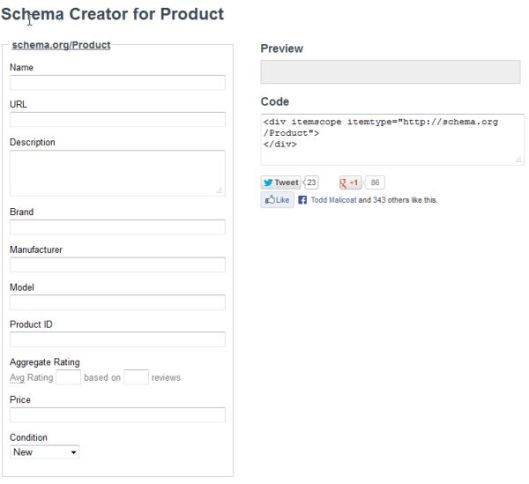

Another way businesses fail to get the most out of their ranking and listing is by ignoring schema markup. How often have you clicked on a SERP listing because it has five yellow stars next to it? Well, your customers are the same. Get these small things right and you can see a real lift in local search traffic, even without increasing your visibility at all.
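Review stars come from structured data on your page. As a minimal sketch, assuming you generate pages with Python and use schema.org’s `LocalBusiness`, `PostalAddress`, and `AggregateRating` types, the function below emits a JSON-LD block; the function name and sample values are hypothetical. Whether stars actually appear is up to the search engine, and Google’s eligibility rules for review markup have tightened since 2015, so check its current structured-data guidelines before relying on this.

```python
import json


def local_business_jsonld(name, street, locality, postcode, phone,
                          rating_value, review_count):
    """Build a schema.org LocalBusiness JSON-LD <script> block with an AggregateRating."""
    data = {
        "@context": "https://schema.org",
        "@type": "LocalBusiness",
        "name": name,
        "telephone": phone,
        "address": {
            "@type": "PostalAddress",
            "streetAddress": street,
            "addressLocality": locality,
            "postalCode": postcode,
        },
        "aggregateRating": {
            "@type": "AggregateRating",
            "ratingValue": rating_value,
            "reviewCount": review_count,
        },
    }
    # Escape "</" so a value containing "</script>" cannot close the tag early;
    # "\/" is a valid JSON escape, so parsers still read the original text.
    body = json.dumps(data, indent=2).replace("</", "<\\/")
    return f'<script type="application/ld+json">\n{body}\n</script>'
```

The rating values should reflect real reviews; marking up ratings you can’t substantiate risks having rich results withheld.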

4. Citations

Yes, citations are still important. Even though they can be easily gamed, they are still a trustworthy indicator of where a business is located. If you’ve got 200 citations that all tell Google you’re based in the same place, with the same address in the same format, it is going to trust that information and reflect it in its SERPs.

There are now lots of different tools for identifying and maintaining your local citations on the Web, the most recent of which is Moz Local. These tools let you manage all of your online business directory listings from one place. They push your business details to all relevant citation sources and make sure those details are always presented in exactly the same format as your Google listing.
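The core idea behind these tools, checking that every citation agrees on your name, address, and phone number (often shortened to NAP), can be sketched in a few lines. This is a minimal illustration, not how Moz Local or any other product works: it assumes you have already collected each directory’s details into a dict, and the abbreviation table, function names, and source names are hypothetical.

```python
import re
from collections import Counter

# Hypothetical abbreviation table; extend it for the address formats you see.
ABBREVIATIONS = {"street": "st", "road": "rd", "avenue": "ave", "suite": "ste"}


def normalize_nap(name, address, phone):
    """Reduce a (name, address, phone) citation to a canonical, comparable form."""
    def clean(text):
        text = re.sub(r"['’]", "", text.lower())        # "Joe's" -> "joes"
        words = re.findall(r"[a-z0-9]+", text)          # drop punctuation
        return " ".join(ABBREVIATIONS.get(w, w) for w in words)

    digits = re.sub(r"\D", "", phone)                   # keep phone digits only
    return clean(name), clean(address), digits


def find_inconsistent_citations(citations):
    """Return sources whose normalized NAP differs from the most common version."""
    if not citations:
        return []
    normalized = {source: normalize_nap(*nap) for source, nap in citations.items()}
    canonical, _ = Counter(normalized.values()).most_common(1)[0]
    return sorted(s for s, nap in normalized.items() if nap != canonical)
```

Treating the most common version as canonical is a simplifying assumption; in practice you would compare every citation against the details on your Google listing, as the tools above do.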

5. Do Your Other Properties Rank?

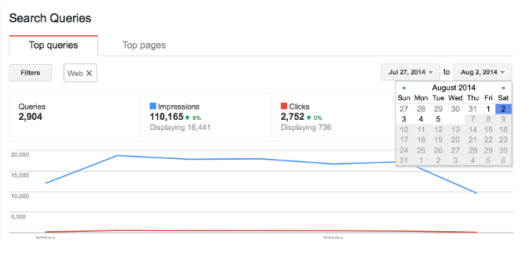

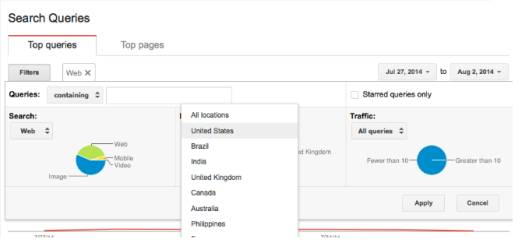

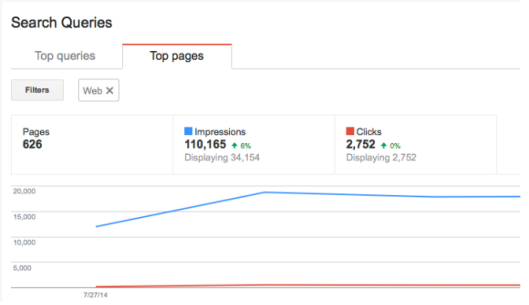

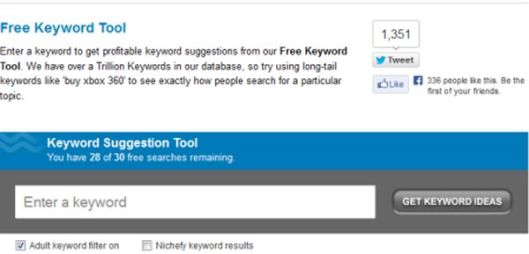

As I’ve already mentioned, it’s best to approach local SEO with the aim of increasing your visibility in your key search results, rather than focusing on the rank of one or two properties. So, which other properties should you be focusing on in order to achieve this? Well, this depends on your search terms.

As you know, the perceived relevance of particular properties varies with the search term itself. If it’s a visually led search, you will probably see images ranking highly; if video is particularly relevant, you’ll see videos high up in the SERP. One of the best ways to work this out is simply to carry out searches using your key terms and take note of what shows up. This tells you which types of media do well, and also what your competitors are doing, so you can focus your efforts on the exact properties Google deems relevant to your search.

Do their Yelp listings sit below their website and Places listing? What about their social properties? Are they getting good visibility? If so, which ones? If you focus on developing these properties, making sure each one carries as much information as possible, you will start to see broader visibility for your search.

Another, often ignored, way to increase the visibility of these other properties is to do everything you can to help them rank. Though this seems counterintuitive (you should focus your SEO on your own website, right?), linking to these other properties wherever possible can help increase their perceived authority and rank.

Your Google properties and company website should always receive the majority of your attention. However, by thinking more broadly about your presence in the SERPs, you’re likely to increase both your visibility and the trust you earn, not just from Google but from potential customers, too.

Source: SEW